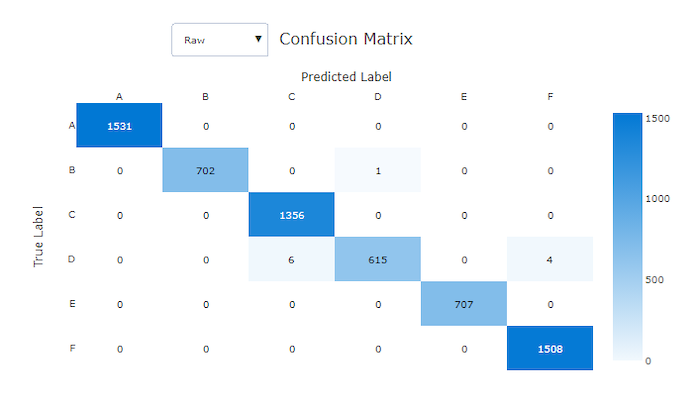

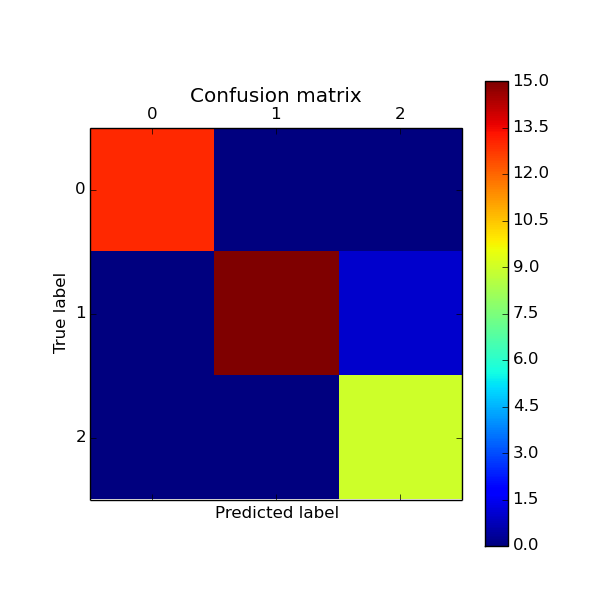

software recommendation - Python library that can compute the confusion matrix for multi-label classification - Data Science Stack Exchange

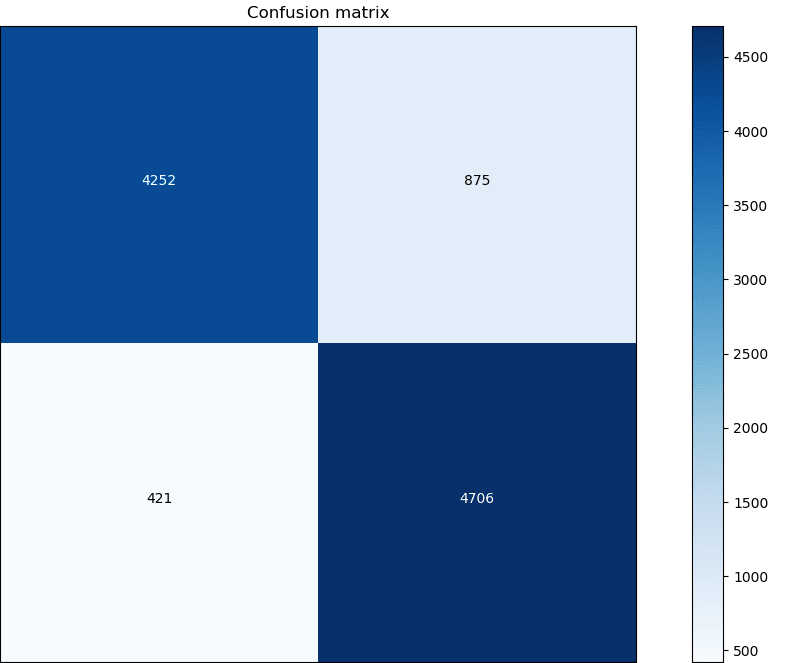

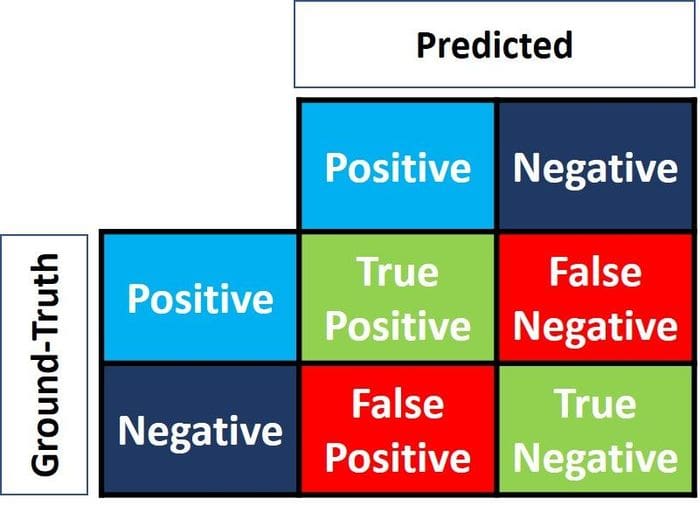

python - Scikit-learn: How to obtain True Positive, True Negative, False Positive and False Negative - Stack Overflow

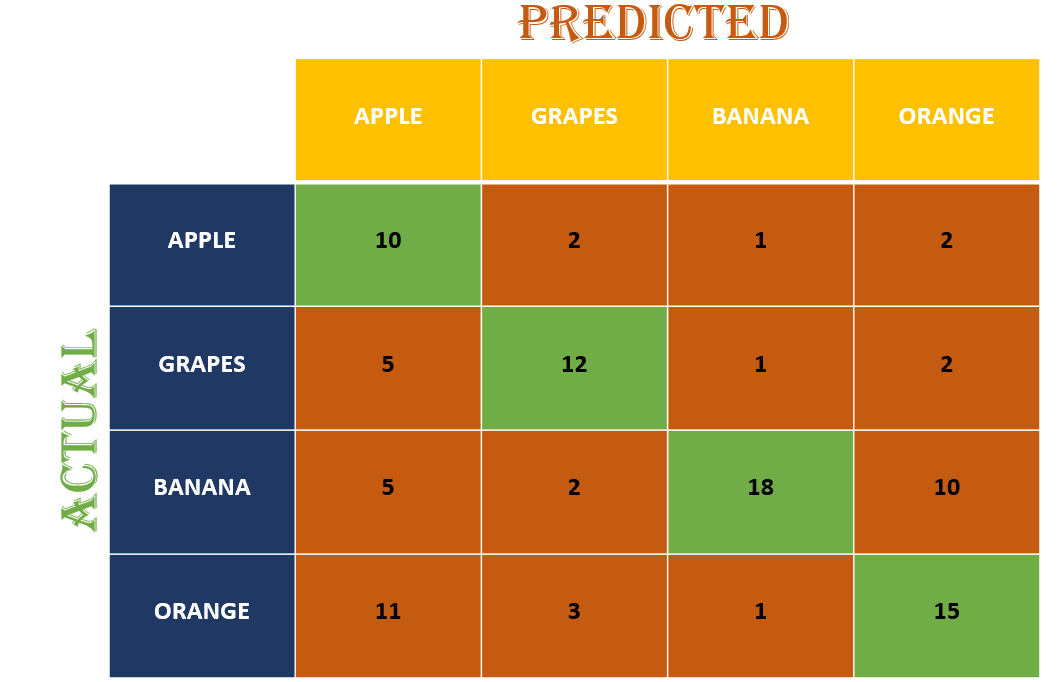

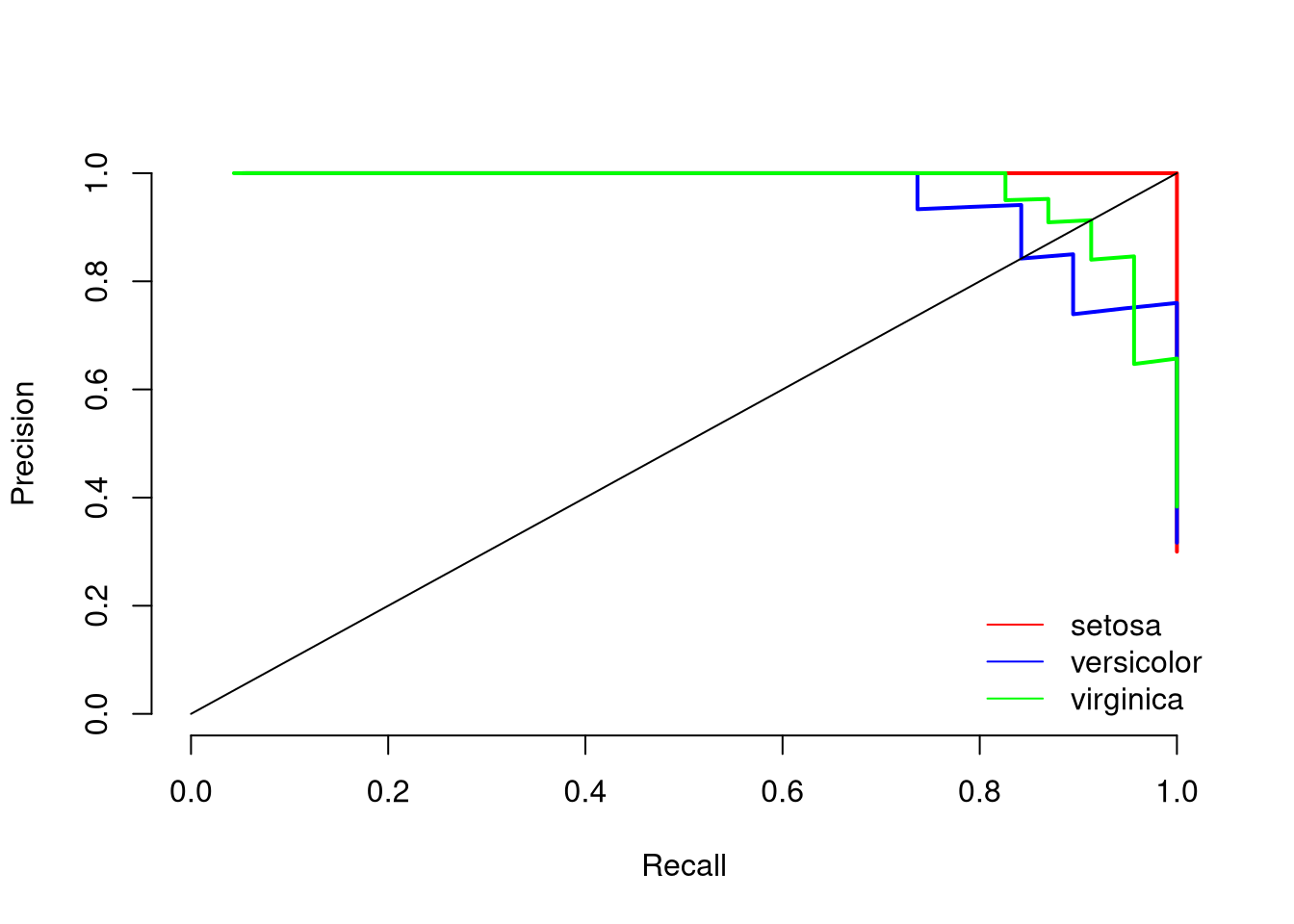

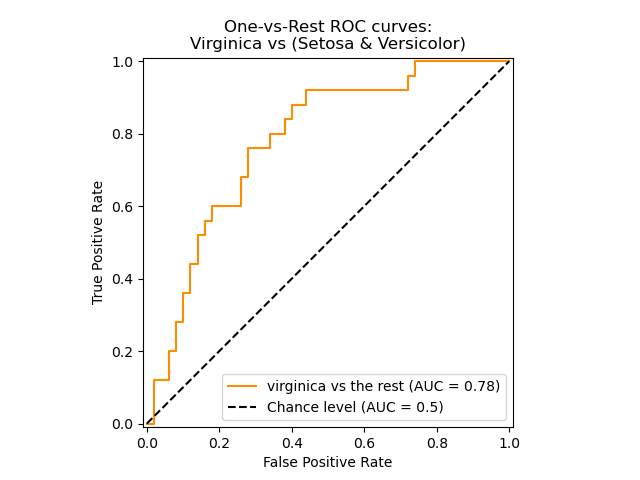

Multi-class Classification: Extracting Performance Metrics From The Confusion Matrix | by Serafeim Loukas | Towards Data Science

Confusion Matrix | ML | True Positive | True Negative | False Positive | False Negative - P1 - YouTube